SVN Commit Hooks For a Better Codebase

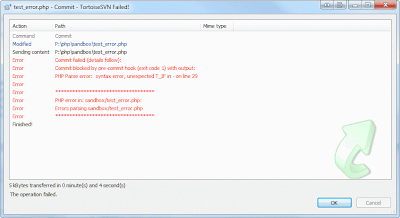

This is a cross post from the Wayfair Engineering Blog As we have mentioned before, the main source control system we use at Wayfair is SVN, with TortoiseSVN as our client. One of the things we love about SVN is the ability to add commit hooks, or checks that run when someone tries to commit a file to source control. By having a few key checks we can prevent bugs, ensure consistent coding practices, and generally have a cleaner codebase. The first commit hook we added was a PHP lint check. This means that when you try to commit a PHP file we run php -l on it from the command line, ensuring that the file has no syntax errors: Rejected commit due to a syntax error in a PHP file This might seem like a paranoid check, and you might assume that this kind of thing never happens, but with our ASP code (where we have no syntax check) we saw people committing files with invalid syntax on a semi-regular basis. Sometimes people are making the same change in a number of files and fail